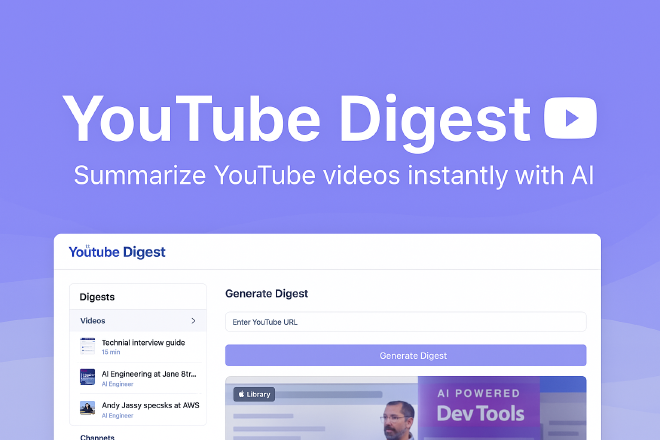

YouTube Digest – Containerized AI Summarization Pipeline

Table of Contents

Overview #

YouTube Digest is a Docker‑ready web app that turns any YouTube URL into a chapter‑aware, AI‑generated summary in under 30 seconds. The backend pulls the transcript with yt‑dlp, feeds it to OpenAI’s o3‑mini, then serves up a concise digest with clickable chapters via a modern Next.js UI.

✨ Key Features #

- 🔄 Transcript Processing via

yt-dlp - 🤖 AI‑Powered Summaries (OpenAI o3‑mini)

- ⏱️ Chapter & Timestamp Navigation

- 🗄️ Persistent Storage in PostgreSQL

- 📦 Spin up everything with one

docker-compose up

Technical Design Benefits #

The asynchronous architecture of this pipeline brings several key benefits:

- Responsiveness: Immediate user feedback and fast initial validations prevent frontend bottlenecks.

- Scalability: Decoupled background tasks allow independent scaling, handling increased load without system degradation.

- Resilience: Errors in transcript fetching or AI summarization don’t affect core API availability or user interaction; tasks can retry or fail gracefully.

- Cost Efficiency: Persistent storage of transcripts and summaries prevents redundant API calls, reducing operational costs.

Core Technology Stack #

The application utilizes a carefully selected, modern stack optimized for asynchronous processing, scalability, and a responsive user experience:

| Layer | Technology | Rationale & Role in Pipeline |

|---|---|---|

| Frontend | Next.js / TypeScript / Tailwind CSS / Shadcn UI / React Query | Provides a type-safe, modern UI built with Next.js. Styled using Tailwind CSS utilities and Shadcn UI components. Efficiently manages API data fetching, caching, and status polling with React Query. |

| API Server | FastAPI (Python) | Offers robust, high-performance APIs with built-in async support, automatic documentation, and straightforward task queueing integration. |

| Background Workers | FastAPI Background Tasks (potential upgrade: Celery) | Enables efficient, asynchronous handling of intensive tasks (transcript fetching, AI summarization) without blocking the main request thread, maintaining rapid frontend responses. |

| Transcript Fetching | yt-dlp / TranscriptService | Extracts reliable metadata and transcript content from YouTube, providing foundational data for the summarization pipeline. |

| AI Summarization | OpenAI API (GPT Models, e.g., o3-mini) | Delivers accurate, concise, high-quality summaries from transcripts, providing the core user-value proposition of the application. |

| Database & Cache | PostgreSQL | Acts as persistent storage and caching layer, reducing redundant external API calls, improving responsiveness, and providing reliable structured data access. |

| Containerization | Docker / Docker Compose | Ensures environment consistency, simplifies setup and deployment, and isolates services clearly, facilitating scalability and easy maintenance. |

🚀 Conclusion & What’s Next #

YouTube Digest started as a fast experiment and quickly evolved into a modular, async-first AI tool with a strong architectural backbone. It’s a proof-of-concept that already solves a real problem, while quietly laying down infrastructure for much more.

Key architectural decisions—emphasizing background task processing, containerization, and strategic data persistence—ensure the system remains responsive, scalable, and cost-effective.

Next on the Roadmap: #

- ✅ Persistent digests ready for personalization.

- ⚙️ User dashboards for usage insights and cost tracking.

- 🔍 Library views for easy browsing and retrieval of past insights.

- 🤖 Plug-and-play upgrades as newer AI models emerge.

At its worst, YouTube Digest is already useful. But what’s exciting is that every improvement—from better models to smarter UX—is already built into the architecture.

🔎 Explore the Code #

If you’re curious how the pieces fit together—from task queues to digest storage to real-time polling—jump into the repo. Modular, containerized, and easy to run locally. Fork it, explore it, or build on top of it—it’s all there.

Github Repo